Transformers

Published:

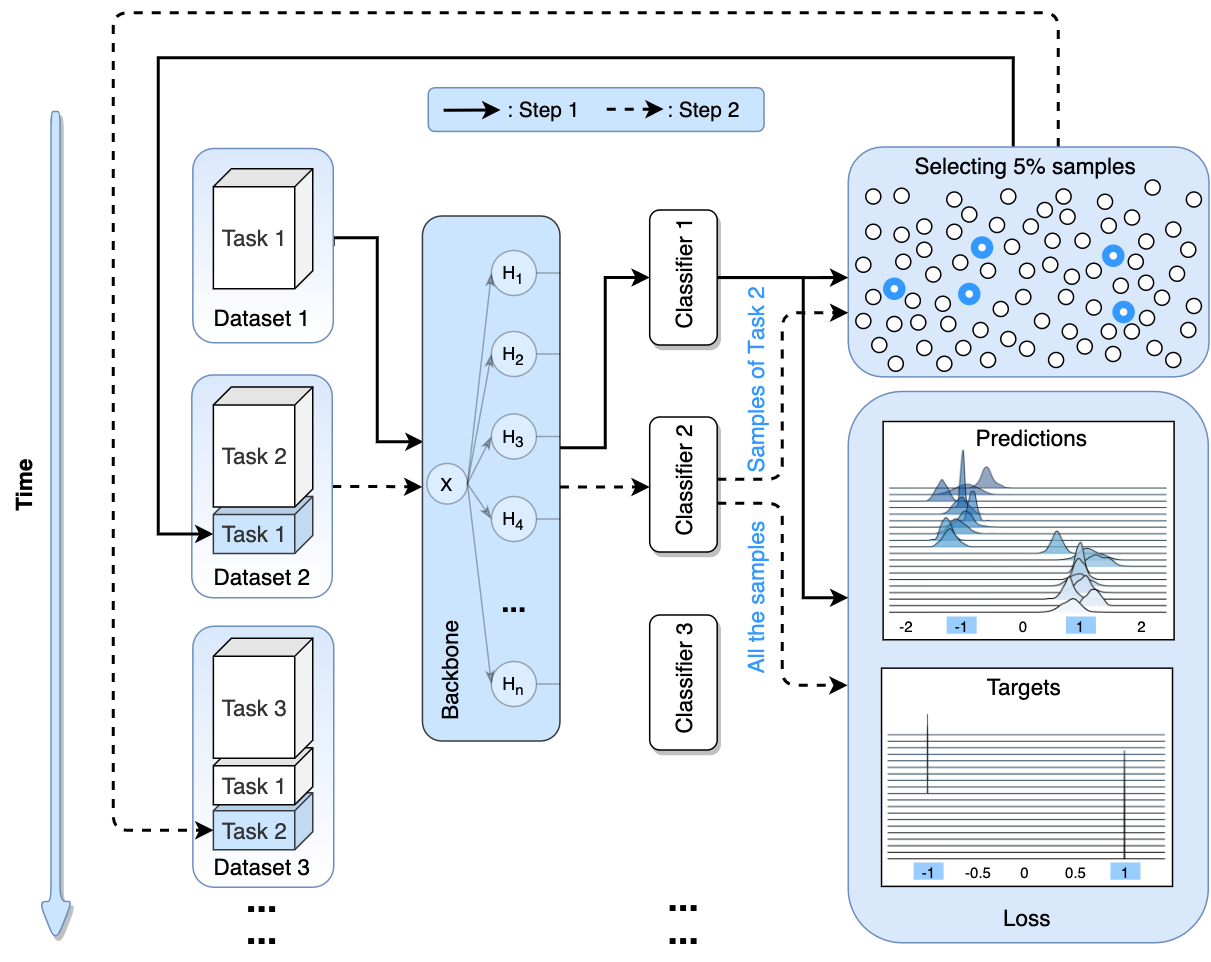

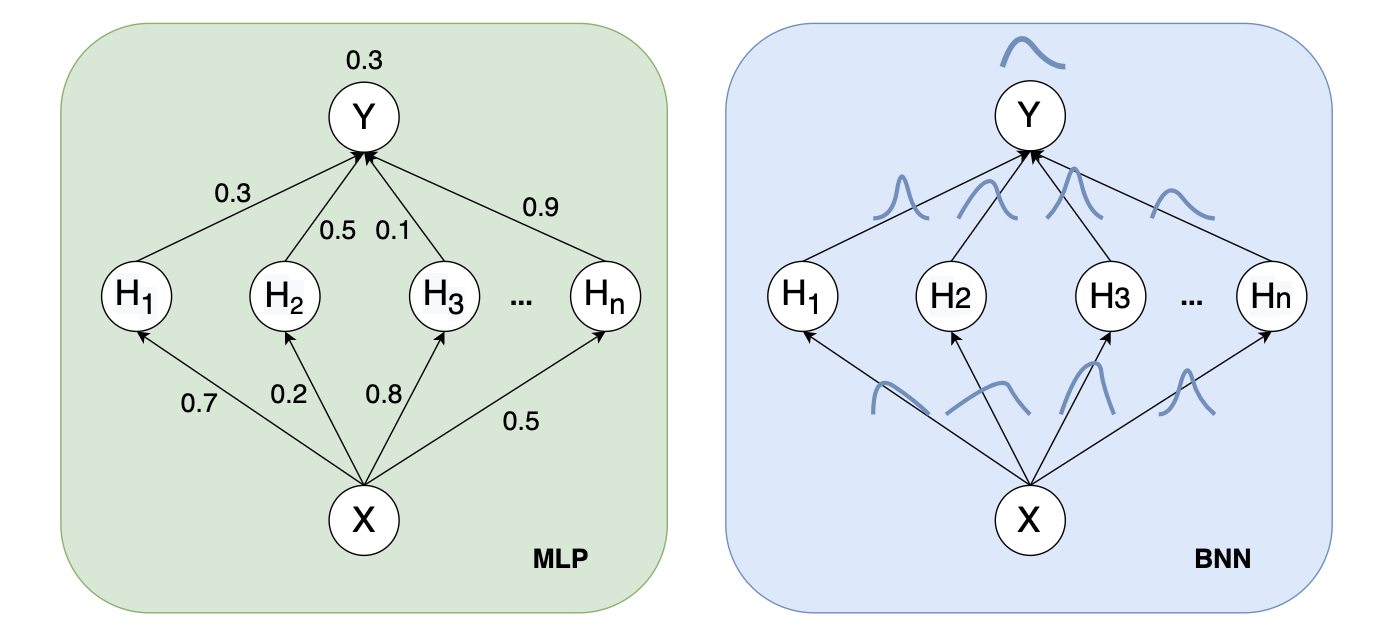

Introduction

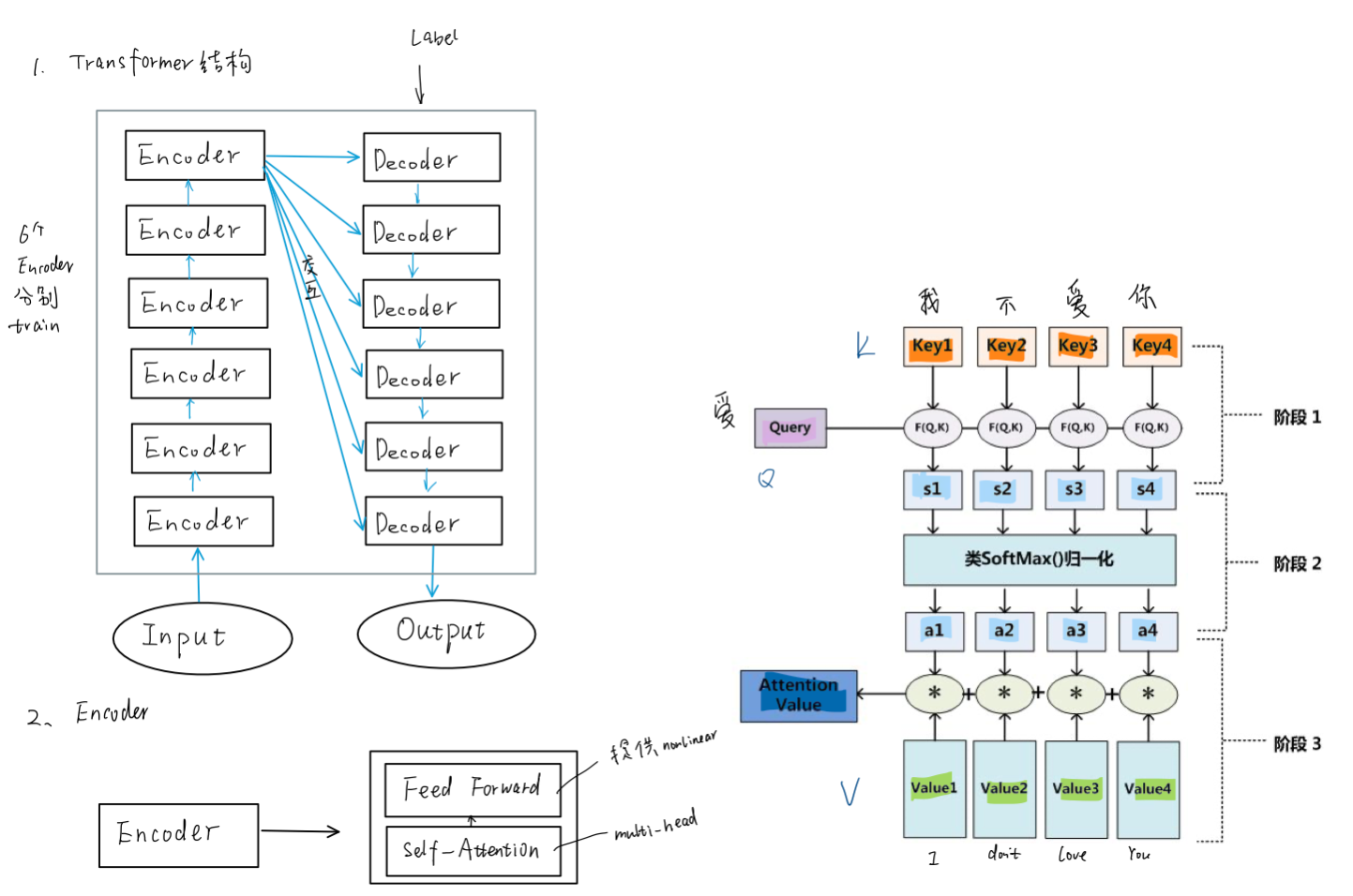

A Transformer layer combines Self-Attention with a Feed-Forward Neural Network. Each of these sublayers is wrapped in a residual (skip) connection, as in ResNet.
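To make the ResNet-style connection concrete, here is a minimal NumPy sketch of a feed-forward sublayer wrapped in a skip connection. The names `feed_forward` and `with_residual` and all sizes are illustrative assumptions, and layer normalization is left out to keep the focus on the residual path.

```python
import numpy as np

def feed_forward(x, w1, b1, w2, b2):
    # Position-wise FFN: the same two-layer MLP (with ReLU) is applied to every token.
    return np.maximum(0.0, x @ w1 + b1) @ w2 + b2

def with_residual(x, sublayer):
    # ResNet-style skip connection: the sublayer output is added to its own input.
    return x + sublayer(x)

rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 4, 8, 32  # illustrative sizes, not from the original post
x = rng.normal(size=(seq_len, d_model))
w1, b1 = rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
w2, b2 = rng.normal(size=(d_ff, d_model)), np.zeros(d_model)

y = with_residual(x, lambda h: feed_forward(h, w1, b1, w2, b2))
print(y.shape)  # same shape as x, so layers can be stacked
```

Because the output has the same shape as the input, the same wrapper can be placed around the self-attention sublayer as well.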

Encoder

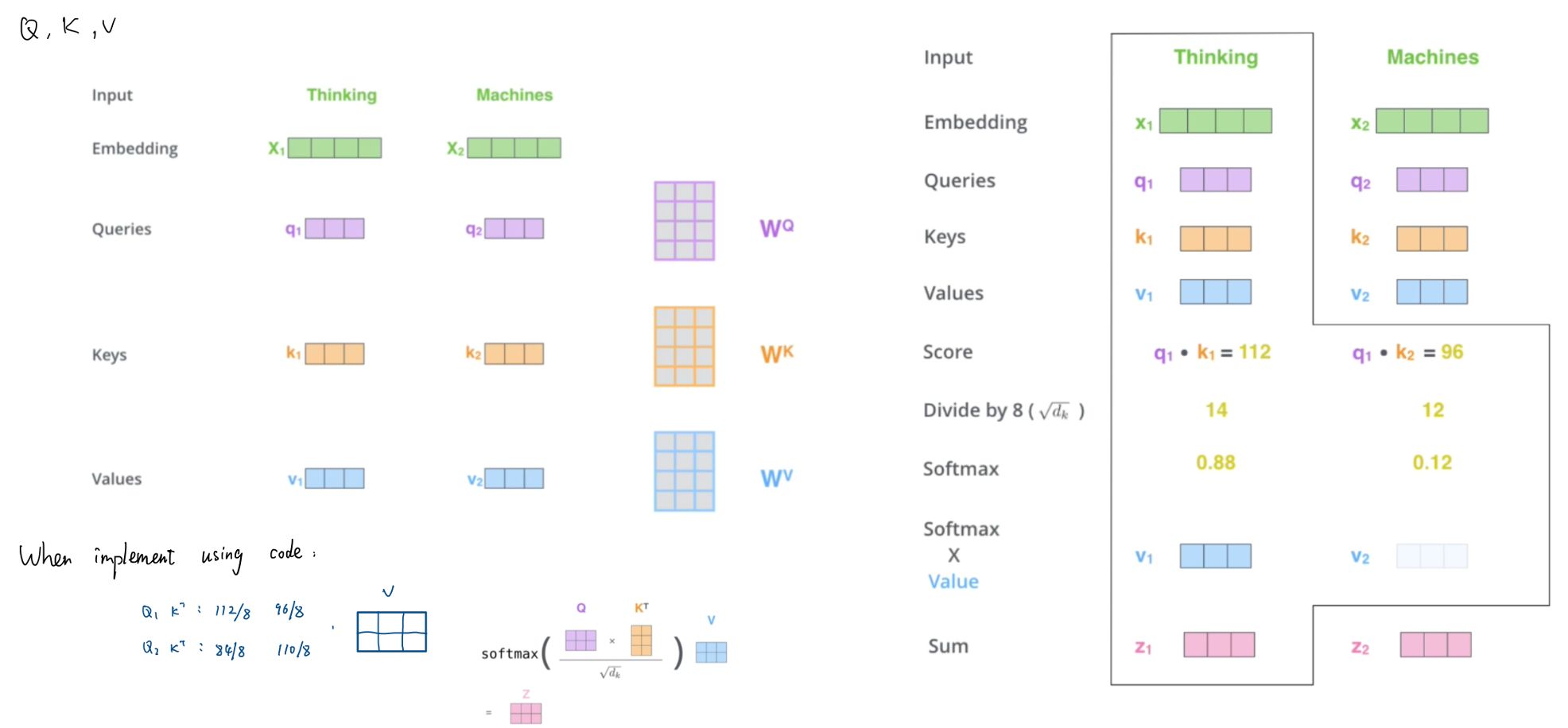

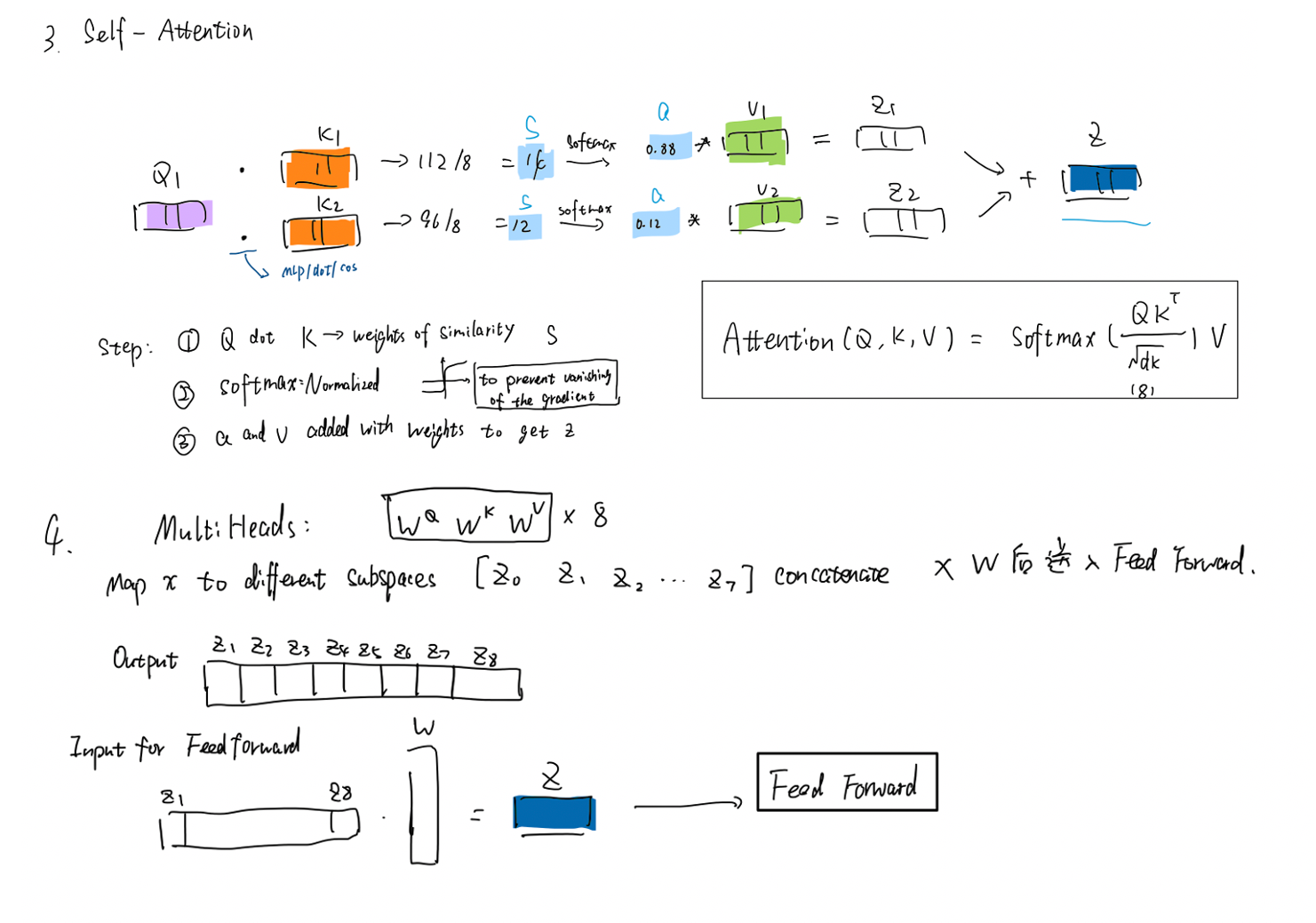

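The Self-Attention mechanism works like soft addressing over Key-Value pairs. Instead of retrieving the single Value whose Key matches the Query, each token retrieves a weighted sum of all Values, with weights given by how well its Query matches each Key. The output for every token therefore contains information from all the tokens of the sequence.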

Structure of Encoder

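Wq, Wk and Wv are the learned weight matrices that project each input token into its Query, Key and Value vectors.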

Attention mechanism = Soft addressing

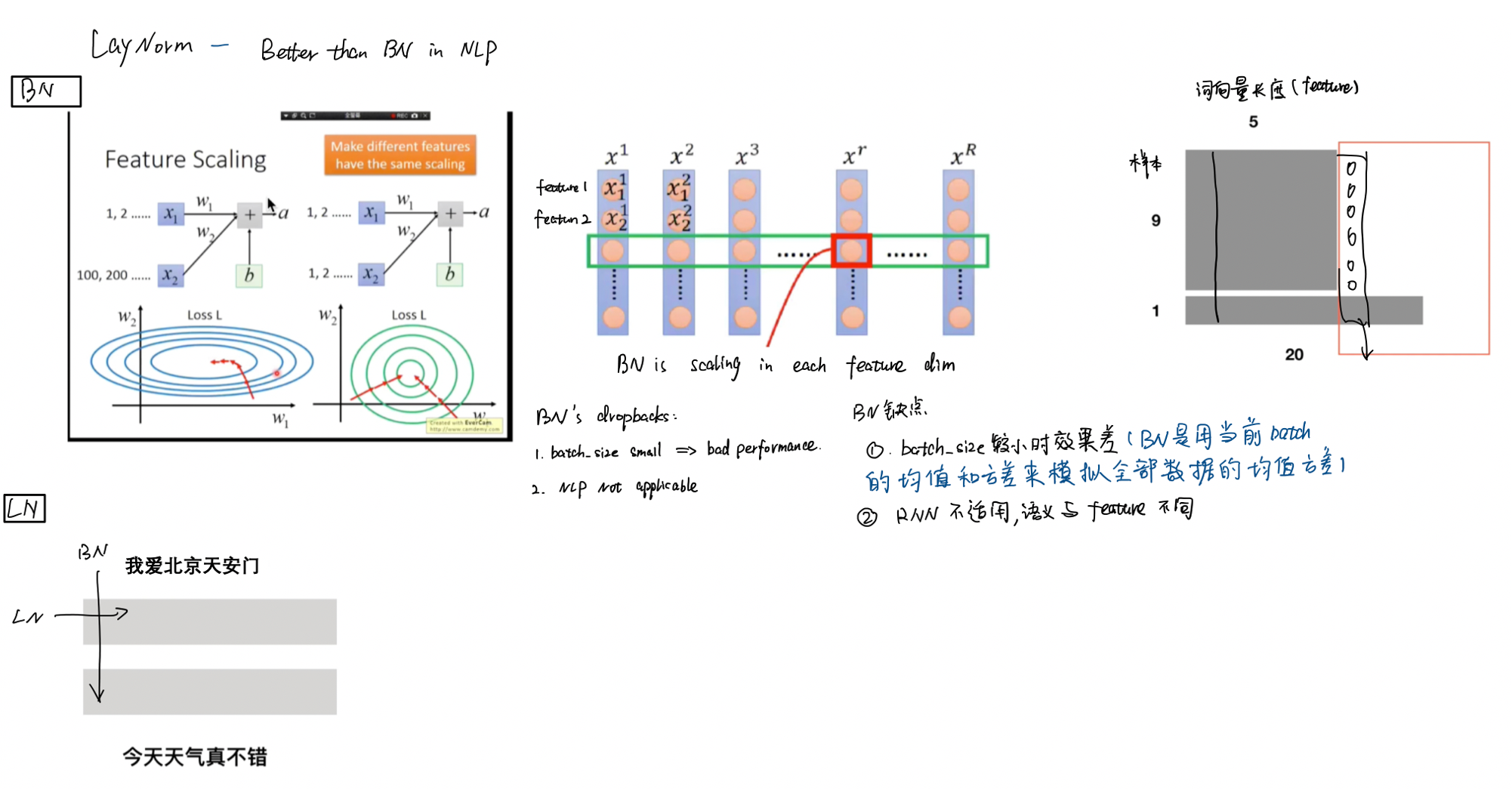
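The soft-addressing view can be sketched in a few lines of NumPy. This is a single-head, unbatched illustration; the function names, the sizes and the random weights are assumptions made for the example, not a reference implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    # Project every token into query, key and value vectors.
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Query-key similarity, scaled by sqrt(d_k).
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # "Soft address": each row is a probability distribution over all tokens.
    weights = softmax(scores, axis=-1)
    # Each output row is a weighted sum of all value vectors.
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 16, 8  # illustrative sizes
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) / np.sqrt(d_model) for _ in range(3))

out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape, weights.shape)  # (5, 8) (5, 5)
```

Each row of `weights` sums to 1, which is what makes the lookup "soft": every token reads a blend of all Values rather than one exact match.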

BN ==> LN

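Transformers use Layer Normalization (LN) instead of Batch Normalization (BN). In NLP problems, the sentences in a batch can have different lengths, and BN normalizes each feature dimension using statistics computed across the batch, which causes three problems:

- Shorter sentences are padded, so some entries are 0, which skews the batch statistics and makes BN perform badly.

- A small batch_size gives noisy batch statistics, which also causes bad performance.

- Normalizing a feature across different sentences is not reasonable when each sample is a sentence. LN instead normalizes across the features of each token, independently of the other samples in the batch.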
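The difference between the two normalizations comes down to which axes the statistics are taken over. The sketch below is a simplified illustration: learned gain and bias are omitted, the `batch_norm` here pools statistics over batch and positions, and the padded "short sentence" is made-up data.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Statistics per token, taken over the feature axis only.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def batch_norm(x, eps=1e-5):
    # Statistics per feature, pooled over the batch and position axes.
    mean = x.mean(axis=(0, 1), keepdims=True)
    var = x.var(axis=(0, 1), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
d_model = 4
long_sent = rng.normal(size=(6, d_model))        # 6 real tokens
short_sent = np.zeros((6, d_model))
short_sent[:2] = rng.normal(size=(2, d_model))   # 2 real tokens + 4 zero padding rows

batch = np.stack([long_sent, short_sent])        # shape (2, 6, d_model)
alone = long_sent[None]                          # the long sentence on its own

# Does the long sentence's normalized output depend on its batch neighbour?
ln_same = np.allclose(layer_norm(batch)[0], layer_norm(alone)[0])
bn_same = np.allclose(batch_norm(batch)[0], batch_norm(alone)[0])
print(ln_same, bn_same)
```

With LN the long sentence is normalized identically whether or not the padded sentence shares its batch, while the BN result shifts because the padding zeros enter the pooled mean and variance.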

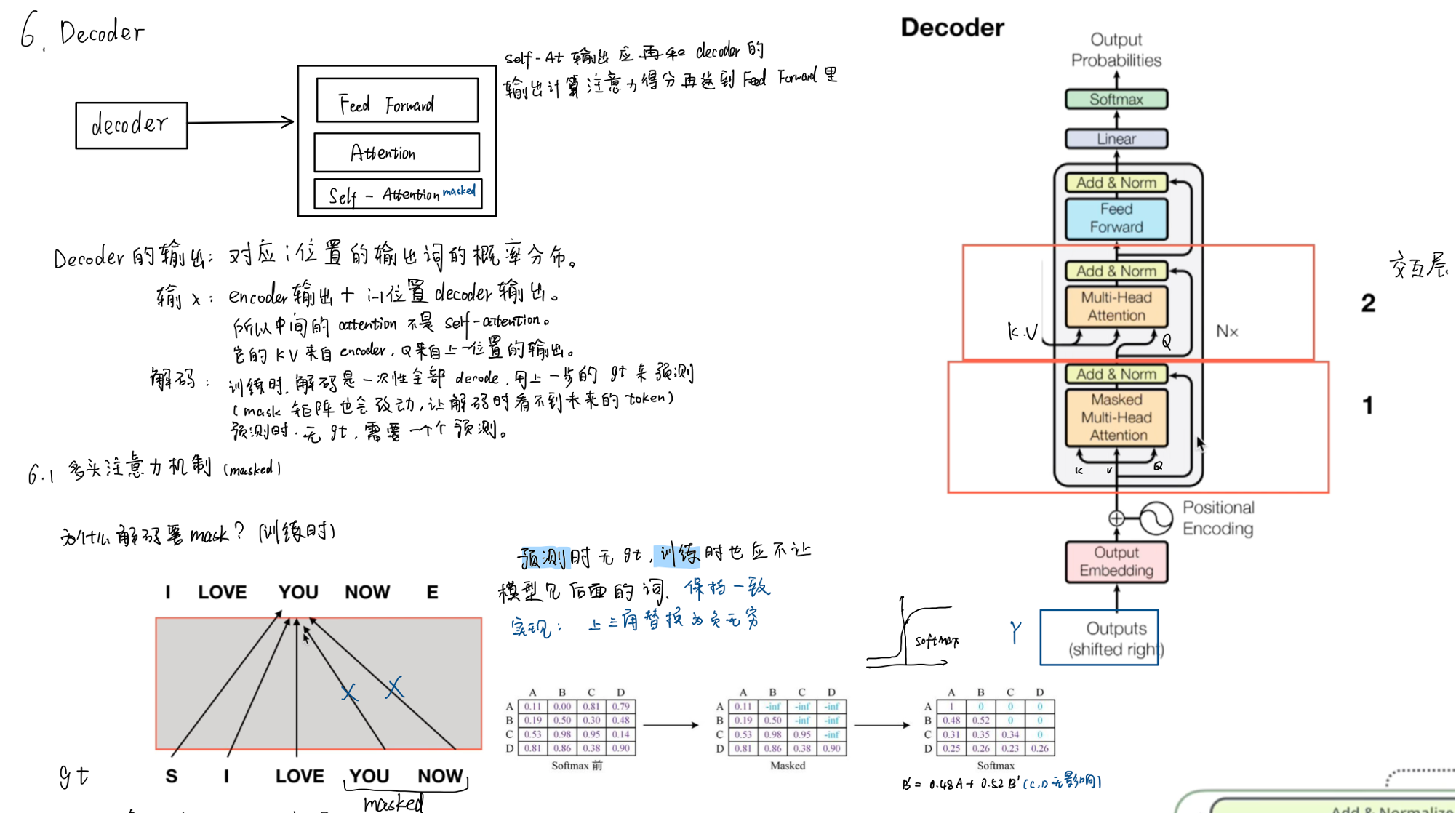

Decoder

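The decoder uses masked self-attention: each position may attend only to itself and earlier positions, so the model cannot see the tokens it is supposed to predict. This ensures that the decoder works with the same information during training as it does at test time, when future tokens are not yet available.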
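One common way to implement this masking is a lower-triangular (causal) mask applied to the attention scores before the softmax. The following is a minimal single-head sketch with illustrative names and sizes, not the exact code of any particular library.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def causal_mask(n):
    # True where attention is allowed: position i may look at positions j <= i.
    return np.tril(np.ones((n, n), dtype=bool))

def masked_attention_weights(q, k):
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Future positions get -inf, so they receive exactly zero weight after softmax.
    scores = np.where(causal_mask(len(q)), scores, -np.inf)
    return softmax(scores, axis=-1)

rng = np.random.default_rng(0)
seq_len, d_k = 4, 8  # illustrative sizes
q = rng.normal(size=(seq_len, d_k))
k = rng.normal(size=(seq_len, d_k))

weights = masked_attention_weights(q, k)
print(np.round(weights, 2))  # upper triangle is all zeros
```

The first row can only attend to the first token, so its weight there is 1. During training the whole target sequence is fed in at once, and the mask is what keeps each position from reading ahead.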

In the decoder's encoder-decoder attention, the K and V come from the encoder output, while the Q comes from the decoder.
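As a rough sketch of this encoder-decoder (cross) attention, the Queries below are computed from decoder states while the Keys and Values are computed from the encoder output. The function name `cross_attention`, the sizes and the random inputs are assumptions for illustration only.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(dec_x, enc_out, w_q, w_k, w_v):
    q = dec_x @ w_q      # Queries come from the decoder
    k = enc_out @ w_k    # Keys come from the encoder output
    v = enc_out @ w_v    # Values come from the encoder output
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]), axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(0)
src_len, tgt_len, d_model, d_k = 6, 3, 16, 8  # illustrative sizes
enc_out = rng.normal(size=(src_len, d_model))
dec_x = rng.normal(size=(tgt_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) / np.sqrt(d_model) for _ in range(3))

out, weights = cross_attention(dec_x, enc_out, w_q, w_k, w_v)
print(out.shape, weights.shape)  # (3, 8) (3, 6)
```

The attention matrix has one row per target position and one column per source position, so every decoder token reads from the whole encoded source sentence.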

Contact

The above is a brief description of Transformers. If anything is unclear or open to debate, feel free to contact Leslie Wong.